- Wake County Public School System

- Student Outcomes

Glossary

This list contains commonly used educational and other specialized terms found in student outcomes reports. Terms are listed from A to Z; select a letter from the list to move to the terms starting with that letter.

A | B | C | D | E | F | G | H | I | J | K | L | M | N | O | P | Q | R | S | T | U | V | W | X | Y | Z

A

Academic Growth: Academic growth is an indication of the progress that students in the school made over the previous year. The standard is roughly equivalent to a year’s worth of expected growth for a year of instruction. Growth is reported for each school as Exceeded Growth Expectations, Met Growth Expectations, or Did Not Meet Growth Expectations as measured by EVAAS, a statistical tool North Carolina uses to measure student growth when common assessments are administered.

ACCESS for ELLs (Assessing Comprehension and Communication in English State-to-State for English Language Learners): North Carolina’s required annual English language proficiency assessment, which complies with Title I of the federal Elementary and Secondary Education Act (ESEA) as amended by the Every Student Succeeds Act (ESSA). See WIDA.

Accommodations: Modifications in the way assessments are designed or administered so that students with disabilities (SWD), English Learners (EL), or other test takers who cannot take the original test under standard test conditions can be included in the assessment. Assessment accommodations or adaptations might include Braille forms for blind students or tests in native languages for students whose primary language is something other than English.

Accountability: A means of judging policies and programs by measuring their outcomes or results against agreed-upon standards. An accountability system provides the framework for measuring outcomes - not merely processes or workloads.

Accountability Framework: The North Carolina Department of Public Instruction (NCDPI) accountability framework includes the following key measures:

- Student performance on the required end-of-grade and end-of-course tests;

- Academic growth outcomes for all schools;

- English Learner Progress toward English language attainment as measured by the ACCESS for ELLs assessment;

- Math Course Rigor for high school students;

- ACT and ACT WorkKeys assessment results; and

- Four-year Cohort Graduation Rate.

These indicators are then used to determine the following Accountability outcomes:

- School Performance Grades (SPG) for schools and for school-level subgroups;

- Long-term goals for schools to improve achievement and reduce performance gaps;

- School designations of Comprehensive Support and Improvement Schools as well as Targeted Support and Improvement Schools; and

- Low-Performing Schools and Districts.

Achievement Data: Any data that reflect students’ academic attainments. Formative data are collected throughout the year and are used primarily to drive instruction; summative data are collected at the end of a cycle and are generally used to assess overall outcomes and examine areas for improvement. Benchmark data are like summative data, but are collected more frequently to determine progress toward summative goals.

Achievement Levels: To better report students’ career and college readiness, the North Carolina Department of Public Instruction uses a four-level achievement scale:

- Not Proficient: Students who are Not Proficient demonstrate inconsistent understanding of grade-level content standards and will need support.

- Level 3: Students at Level 3 demonstrate sufficient understanding of grade-level content standards though some support may be needed to engage with content at the next grade/course.

- Level 4: Students at Level 4 demonstrate a thorough understanding of grade-level content standards and are on track for career and college.

- Level 5: Students at Level 5 demonstrate comprehensive understanding of grade-level content standards, are on track for career and college, and are prepared for advanced content at the grade/course.

For NCEXTEND1 tests, only three achievement levels are reported (i.e., Not Proficient, Level 3, and Level 4).

- Not Proficient: Students who are Not Proficient demonstrate inconsistent understanding of the North Carolina Extended Content Standards and will need significant support.

- Level 3: Students at Level 3 demonstrate sufficient understanding of the North Carolina Extended Content Standards though some support may be needed to engage with content at the next grade/course.

- Level 4: Students at Level 4 demonstrate a thorough understanding of the North Carolina Extended Content Standards and are on track for competitive employment and post-secondary education.

Achievement levels: Predetermined performance standards that allow a student's performance to be compared to grade-level expectations. The column headings on the ISR outline each achievement level and the scale score range associated with each achievement level (See Terms featured on the ISR).

Achievement test: An objective examination that measures educationally relevant skills or knowledge about such subjects as reading, spelling, or mathematics.

ACT WorkKeys: Drill down to ACT WorkKeys on the Assessment Glossary page at: https://www.wcpss.net/Page/52309

Advanced Placement tests (AP): Drill down to Advanced Placement tests on the Assessment Glossary page at: https://www.wcpss.net/Page/52309

Alignment: The process of linking content and performance standards to assessment, instruction, and learning in classrooms. One typical alignment strategy is the step-by-step development of (a) content standards, (b) performance standards, (c) assessments, and (d) instruction for classroom learning. Ideally, each step is informed by the previous step or steps, and the sequential process is represented as follows: Content Standards - Performance Standards - Assessments - Instruction for Learning. In practice, the steps of the alignment process will overlap. The crucial question is whether classroom teaching and learning activities support the standards and assessments. System alignment also includes the links among other school, district, and state resources and whether those resources support the goals of the standards, i.e., whether professional development priorities and instructional materials are linked to what is necessary to achieve the standards.

Alternate Assessments: Ways to assess students academically other than through standard test administration. Alternate assessments are used for some students with disabilities. For example, NCEXTEND1 is the state's alternate assessment for the EOG and EOC tests.

American College Testing (ACT): Drill down to ACT on the Assessment Glossary page at: https://www.wcpss.net/Page/52309

Analytic Scoring: Evaluating student work across multiple dimensions of performance rather than from an overall impression (holistic scoring). In analytic scoring, individual scores for each dimension are scored and reported. For example, analytic scoring of a history essay might include scores of the following dimensions: use of prior knowledge, application of principles, use of original source material to support a point of view, and composition. An overall impression of quality may be included in analytic scoring (see Holistic Scoring).

Anchor(s): A sample of student work that exemplifies a specific level of performance. Raters use anchors to score student work, usually comparing the student’s performance to the anchor. For example, if student work was being scored on a scale of 1-5, there would typically be anchors (previously scored student work), exemplifying each point on the scale.

Anecdotal Data: Data obtained from a description of a specific incident in an individual’s behavior (an anecdotal record). The report should be an objective account of behavior considered significant for the understanding of the individual.

Aptitude: A combination of characteristics, whether native or acquired, that is indicative of an individual’s ability to learn or to develop proficiency in some particular area if appropriate education or training is provided.

Aptitude Test: A test consisting of items selected and standardized so that the test predicts a person's future performance on tasks not obviously similar to those in the test. Aptitude tests may or may not differ in content from achievement tests, but they do differ in purpose. An aptitude test might consist of items that predict future learning or performance; achievement tests consist of items that sample the adequacy of past learning. Aptitude tests include those of general academic (scholastic) ability; those of special abilities, such as verbal, numerical, mechanical, or musical; tests assessing "readiness" for learning; and tests that measure both ability and previous learning, and are used to predict future performance—usually in a specific field, such as foreign language, shorthand, or nursing.

Assessment: Any systematic method of obtaining information from tests and other sources, used to draw inferences about characteristics of people, objects, or programs; the process of gathering, describing, or quantifying information about performance; an exercise—such as a written test, portfolio, or experiment—that seeks to measure a student's skills or knowledge in a subject area.

Assessment System: The combination of multiple assessments into a comprehensive reporting format that produces comprehensive, credible, dependable information upon which important decisions can be made about students, schools, districts, or states. An assessment system may consist of a norm-referenced or criterion-referenced assessment, an alternative assessment system and classroom assessments.

Authentic Assessment: An assessment that measures a student's performance on tasks and situations that occur in real life. This type of assessment is closely aligned with, and models, what students do in the classroom.

Average: A statistic that indicates the central tendency or most typical score of a group of scores. Most often average refers to the sum of a set of scores divided by the number of scores in the set.

Average Daily Membership (ADM): The number of days a student is in membership at a school divided by the number of days in a school month or school year.

B

Baseline: Level of behavior associated with a subject before an experiment or intervention begins.

Battery: A test battery is a set of several tests designed to be administered as a unit. Individual subject-area tests measure different areas of content and may be scored separately; scores from the subtests may also be combined into a single score.

Benchmark: A detailed description of a specific level of student performance expected of students at particular ages, grades, or development levels. Benchmarks are often represented by samples of student work. A set of benchmarks can be used as "checkpoints" to monitor progress toward meeting performance goals within and across grade levels.

Benchmark Assessments: Given to students periodically throughout the year or course to determine how much learning has taken place up to a particular point in time and to track progress toward meeting curriculum goals and objectives.

Bias: A situation that occurs in testing when items systematically measure differently for different ethnic, gender, or age groups. Test developers reduce bias by analyzing item data separately for each group, then identifying and discarding items that appear to be biased.

Biserial Correlation: The relationship between an item score (right or wrong) and the total test score.

C

Career-Technical Education Tests: Drill down to Test Information at: https://www.wcpss.net/domain/2340

Ceiling: The upper limit of performance that can be measured effectively by a test. Individuals are said to have reached the ceiling of a test when they perform at the top of the range in which the test can make reliable discriminations. If an individual or group scores at the ceiling of a test, the next higher level of the test should be administered, if available.

Checklist: An assessment that is based on the examiner observing an individual or group and indicating whether or not the assessed behavior is demonstrated.

Classroom Assessment: An assessment developed, administered, and scored by a teacher or set of teachers with the purpose of evaluating individual or classroom student performance on a topic. Classroom assessments may be aligned into an assessment system that includes alternative assessments and either a norm-referenced or criterion-referenced assessment. Ideally, the results of a classroom assessment are used to inform and influence instruction that helps students reach high standards.

Closed-Ended Questions: Questions which have a clear and apparent focus and a clearly called-for answer.

College Admissions Test: A test of a student's ability to participate in special programs or advanced learning situations. For example, an honors-level class or a magnet school may require the attainment of high scores on an assessment for admission.

College and Career Ready (CCR) standard: The percent of students who score at Levels 4 or 5.

College Work Readiness Assessment (CWRA): Drill down to Test Information at: https://www.wcpss.net/domain/2340

Common Core: North Carolina adopted the Common Core State Standards in 2010 as its Standard Course of Study for English language arts and mathematics and began implementation statewide in all public schools in the 2012-13 school year. The Common Core State Standards:

- strengthen academic standards for students;

- were developed by national experts with access to best practices and research from across the nation; and

- allow for a smoother school transition for military students and other students who move during their K-12 schooling.

Composite Score: A single score used to express the combination, by averaging or summation, of the scores on several different tests.

Competency: A group of characteristics, native or acquired, which indicate an individual's ability to acquire skills in a given area.

Competency-based assessment (criterion-referenced assessment): Measures an individual's performance against a predetermined standard of acceptable performance. Progress is based on actual performance rather than on how well learners perform in comparison to others; usually given under classroom conditions.

Confidence Interval (CI): A margin of error around an estimate.

Construct Validity: (see Validity).

Content Validity: (see Validity).

Constructed-Response Item: An assessment unit with directions, a question, or a problem that elicits a written, pictorial, or graphic response from a student. Sometimes called an "open-ended" item.

Content standards: Broadly stated expectations of what students should know and be able to do in particular subjects and (grade) levels. Content standards define for teachers, schools, students, and the community not only the expected student skills and knowledge, but what programs should teach.

Conversion Tables: Tables used to convert a student's test scores from scale score units to grade equivalents, percentile ranks, stanines, or other types of scores.

Correlation: The degree of relationship between two sets of scores. A correlation of 0.00 denotes a complete absence of relationship. A correlation of plus or minus 1.00 indicates a perfect (positive or negative) relationship. Correlation coefficients are used in estimating test reliability and validity.
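As a minimal sketch of how such a coefficient can be computed, the example below assumes the Pearson product-moment correlation (the glossary does not name a specific coefficient). The function name `pearson_r` and the score lists are hypothetical and serve only as illustration.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products of deviations from each mean
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one set of scores is constant")
    return cov / math.sqrt(var_x * var_y)

# Made-up scores for five students
test_a = [1, 2, 3, 4, 5]
rises_with_a = [2, 4, 6, 8, 10]   # perfect positive relationship
falls_with_a = [10, 8, 6, 4, 2]   # perfect negative relationship
unrelated = [2, 1, 3, 1, 2]       # no linear relationship with test_a

r_pos = pearson_r(test_a, rises_with_a)
r_neg = pearson_r(test_a, falls_with_a)
r_zero = pearson_r(test_a, unrelated)
```

With these made-up lists the three results sit at the ends and the middle of the range described above: plus 1.00, minus 1.00, and 0.00.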

Criteria: Guidelines, rules, characteristics, or dimensions that are used to judge the quality of student performance. Criteria indicate what we value in student responses, products or performances. They may be holistic, analytic, general, or specific. Scoring rubrics are based on criteria and define what the criteria mean and how they are used.

Criterion-Related Validity: (see Validity).

Cumulative Percent: (see Percentile Rank).

Cut score: A specified point on a score scale, such that scores at or above that point are interpreted or acted upon differently from scores below that point (see also Performance Standards).

D

Data: A record of an observation or an event such as a test score, a grade in a mathematics class, or response time.

Data Analysis: Identifying patterns/trends among multiple pieces of data.

Data Capture: The electronic survey process used to collect students' end-of-school-year status on K-5 assessments.

Data Displays: Graphs, charts, or tables used to display data trends.

Demographic Data: Descriptive information about a population.

Developmental Scale Scores: The number of questions a student answers correctly is called a raw score. For the grade 3 pretest, the raw score is converted to a developmental scale score. The developmental scale score may be used to measure growth in a specific subject from year to year. Together, the developmental scale score on the grade 3 pretest, given during the first three weeks of school, and the developmental scale score on the end-of-grade test, given during the last three weeks of school, allow parents and teachers to measure a student’s growth in a particular subject.

Diagnostic Test: A test used to "diagnose" or analyze; that is, to locate an individual’s specific areas of weakness or strength, to determine the nature of his or her weaknesses or deficiencies, and, if possible, to suggest their cause.

Difference Score Reliability: Reliability of the distribution of differences between two sets of scores. These scores could be on two different subtests or on a pre- and posttest, where the difference score is typically called a gain score. The meaning of the term "reliability" is the same for a set of difference scores as for a distribution of regular test scores (see Reliability). However, since difference scores are derived from two somewhat unreliable scores, difference scores are often quite unreliable. This must be kept in mind when interpreting difference scores.

Difficulty Index: The percent of students who answer an item correctly, designated as p. (At times defined as the percent who respond incorrectly, designated as q.)
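A minimal sketch of the calculation, assuming item responses are coded 1 for correct and 0 for incorrect. The function name `difficulty_index` and the sample responses are hypothetical. The values are returned as proportions; multiply by 100 to express them as percents.

```python
def difficulty_index(responses):
    """Return (p, q) for one item.

    responses: list of 1 (correct) or 0 (incorrect), one entry per student.
    p is the proportion answering correctly; q is the proportion answering incorrectly.
    """
    if not responses:
        raise ValueError("at least one response is required")
    p = sum(responses) / len(responses)
    return p, 1 - p

# Four students answer the item; three answer correctly
p, q = difficulty_index([1, 1, 1, 0])
```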

Dimensionality: The extent to which a test item measures more than one ability.

Disaggregated Data: Overall results broken down into smaller groupings. For example, test results may be sorted by economic disadvantage, racial and ethnic group, disability, or English proficiency.

Discipline Data: Any data that reflects student behavior.

Discrimination Parameter: The property that indicates how accurately an item distinguishes between examinees of high ability and those of low ability on the trait being measured. An item that can be answered equally well by examinees of low and high ability does not discriminate well and does not give any information about relative levels of performance.

Discrimination Index: The extent to which an item differentiates between high-scoring and low-scoring examinees. Discrimination indices generally can range from -1.00 to +1.00. Other things being equal, the higher the discrimination index, the better the item is considered to be. Items with negative discrimination indices are generally items in need of rewriting.
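One common way to compute a discrimination index is the upper-lower group method: the proportion of high scorers who answer the item correctly minus the proportion of low scorers who do. The sketch below assumes that method and a 27% group size, which is a conventional choice and not something this glossary specifies. The function name `discrimination_index` and the data are hypothetical.

```python
def discrimination_index(item_scores, total_scores, fraction=0.27):
    """Upper-lower discrimination index for one item.

    item_scores: 1 (correct) or 0 (incorrect) per examinee.
    total_scores: total test score per examinee, in the same order.
    fraction: share of examinees placed in each group (assumed 27%).
    """
    n = len(total_scores)
    if n < 2 or len(item_scores) != n:
        raise ValueError("need matching lists with at least 2 examinees")
    k = max(1, round(n * fraction))
    # Rank examinees from lowest to highest total score
    order = sorted(range(n), key=lambda i: total_scores[i])
    lower, upper = order[:k], order[-k:]
    p_upper = sum(item_scores[i] for i in upper) / k
    p_lower = sum(item_scores[i] for i in lower) / k
    return p_upper - p_lower

totals = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
only_top_correct = [0, 0, 0, 0, 0, 0, 0, 1, 1, 1]
only_bottom_correct = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
everyone_correct = [1] * 10

d_good = discrimination_index(only_top_correct, totals)
d_bad = discrimination_index(only_bottom_correct, totals)
d_flat = discrimination_index(everyone_correct, totals)
```

An item answered correctly only by the highest scorers reaches the top of the range, an item answered correctly only by the lowest scorers reaches the bottom, and an item everyone answers correctly does not discriminate at all.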

Distracters: Incorrect answer choices in a multiple-choice test question.

E

Economically Disadvantaged (ED): Students eligible for free or reduced-price lunch (FRL).

Elementary School Tests: Drill down to Elementary Tests at: https://www.wcpss.net/domain/2340

Embedded Test Model: Using an operational test to field test new items or sections. The new items or sections are “embedded” in the operational test and appear to examinees to be indistinguishable from the operational items.

End of Course: Drill down to High School Tests at: https://www.wcpss.net/domain/2340

End of Course Tests: Drill down to Test Information at: https://www.wcpss.net/domain/2340

End of Grade: Drill down to Elementary Tests at: https://www.wcpss.net/domain/2340

End of Grade Mathematics Test: Drill down to Test Information at: https://www.wcpss.net/domain/2340

End of Grade Reading Test: Drill down to Test Information at: https://www.wcpss.net/domain/2340

End of Grade Science Test: Drill down to Test Information at: https://www.wcpss.net/domain/2340

End of Year Summative Math Assessment: Drill down to Test Information at: https://www.wcpss.net/domain/2340

English Language Learner (ELL): Student whose first language is one other than English and who needs language assistance to participate fully in the regular curriculum.

English as a Second Language (ESL): A program model that delivers specialized instruction to students who are learning English as a new language.

Every Student Succeeds Act (ESSA): The Every Student Succeeds Act (ESSA) is a new education law that was signed by President Obama on December 10, 2015. The ESSA replaces No Child Left Behind. States are required to develop their own ESSA plan to comply with the federal law. The state plan will address issues of school accountability, student assessments, support for struggling schools and other elements. The new law continues to focus on accountability and student-level assessments for all students in reading and mathematics in grades 3-8, science assessments at least once in the elementary grades and at least once in the middle grades, college and career readiness in the high school grades, and being accountable for all student subgroups.

The state’s accountability plan must include goals for academic indicators (improved academic achievement on the state assessments, a measure of student growth or other statewide academic indicator for elementary and middle schools, graduation rates for high schools, and progress in achieving proficiency for English Learners) and a measure of school quality and student success (examples include student and educator engagement, access and completion of advanced coursework, postsecondary readiness, school climate and safety). Participation rates on the assessments must also be included in the plan. More information on ESSA may be found here: http://www.ed.gov/ESSA

Equivalent Forms: Any of two or more forms of a test that are closely parallel with respect to content and the number and difficulty of the items included. Equivalent forms should also yield very similar average scores and measures of variability for a given group. Also called parallel or alternate forms.

Error of Measurement: The amount by which the score actually received (an observed score) differs from a hypothetical true score (see Standard Error of Measurement).

EVAAS® (Education Value-Added Assessment System): A customized software system available to all NC school districts. EVAAS® provides diagnostic reports to district and school staff via a web interface containing various charts and graphs. Users can view reports in EVAAS® that predict student EOG/EOC success, show the relative amount of growth students are making from year to year, or reveal patterns in subgroup performance. For more information about EVAAS see: https://evaas.sas.com

EVAAS Teacher Effect Score: The EVAAS Teacher Effect score is a conservative estimate of a teacher’s influence on students’ academic progress. The value provided is a function of the difference between the students’ observed (actual) scores and their expected scores. An average teacher would have an estimate of 0.0. For more information, go to: http://www.dpi.state.nc.us/evaas/ (Definition by SAS)

EVAAS Teacher vs. State Average: The Teacher vs. State Average describes whether there is a statistical difference between the progress of a teacher’s students and the progress of students taught by the average teacher in the state. Comparisons are made based on two standard errors. A designation of “Exceeds Expected Growth, Average Effectiveness” means that there is evidence that the teacher's students made more progress than the Growth Standard (the teacher's index is 2 or greater). A designation of “Meets Expected Growth, Approaching Average Effectiveness” means that there is evidence that the teacher's students made progress similar to the Growth Standard (the teacher's index is between -2 and 2). A designation of “Does Not Meet Expected Growth, Least Effective” means that there is significant evidence that the teacher's students made less progress than the Growth Standard (the teacher's index is less than -2). For more information, go to: https://ncdpi.sas.com
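The index thresholds in this entry can be sketched as a small lookup. This is an illustration only, not the EVAAS implementation: the function name `growth_designation` is hypothetical, the index is taken as already computed, and an index of exactly -2 is assumed to fall in the middle category because the entry reserves the lowest category for values less than -2.

```python
def growth_designation(index):
    """Map a teacher's growth index to the designation described in the entry."""
    if index >= 2:
        return "Exceeds Expected Growth"
    if index < -2:
        return "Does Not Meet Expected Growth"
    # Assumption: -2 <= index < 2 is treated as progress similar to the Growth Standard
    return "Meets Expected Growth"

examples = {i: growth_designation(i) for i in (2.5, 2, 0.3, -2, -2.1)}
```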

Evaluation: When used for most educational settings, evaluation means to measure, compare, and judge the quality of student work, schools, or a specific educational program.

F

Face Validity: (see Validity).

Field Test: A collection of items to approximate how a test form will work. Statistics produced will be used in interpreting item behavior/performance and allow for the calibration of item parameters used in equating tests.

Final Exams (NCFEs): Drill down to Test Information at: https://www.wcpss.net/domain/2340

Floor: The lowest limit of performance that can be measured effectively by a test. Individuals are said to have reached the floor of a test when they perform at the bottom of the range in which the test can make reliable discriminations.

Focus Group: A qualitative data-collection method that relies on facilitated discussions with participants who are asked a series of carefully constructed open-ended questions about their attitudes, beliefs, and experiences.

Foil Counts: Number of examinees who endorse each foil (e.g., number who answer “A”, number who answer “B”, etc.).

Foils: The possible answer choices presented in a multiple-choice question.

Formative Assessments: A process used by teachers and students during instruction that provides feedback to adjust ongoing teaching and learning to improve intended instructional outcomes. The purpose of formative assessments is to assist teachers in identifying where necessary adjustments to instruction are needed to help students achieve the intended instructional outcomes.

Free or Reduced-Price Lunch (FRL): Students are eligible to receive free or reduced-price lunch, based upon parent or guardian financial status through a federal governmental program.

Frequency: The number of times a given score (or a set of scores in an interval grouping) occurs in a distribution or set of scores.

Frequency Distribution: A tabulation of scores from low to high or high to low showing the number of individuals who obtain each score or fall within each score interval.

Frequency Reports: EOG and EOC test reports that list each scale score and the number and percentage of students receiving it.
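As a rough illustration of what such a report tabulates, the sketch below counts how many students received each scale score and what percentage of the group that represents. The function name `frequency_report` and the scale scores are made up for the example; this is not the state's reporting code.

```python
from collections import Counter

def frequency_report(scale_scores):
    """Return (scale score, number of students, percent of students), low to high."""
    counts = Counter(scale_scores)
    total = len(scale_scores)
    return [(score, n, 100 * n / total) for score, n in sorted(counts.items())]

# Hypothetical scale scores for four students
report = frequency_report([450, 452, 450, 455])
for score, n, pct in report:
    print(f"{score}: {n} student(s), {pct:.1f}%")
```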

Full Academic Year (FAY): Student membership of at least 140 days at their current school.

G

Gain Score: Difference between a posttest score and a pretest score.

Goal Summary Report: A summary of EOG and EOC performance on each test by NCSCOS curriculum goal or category. Lists percent of items on a test by goal or category and a comparison of school to state percentage correct. School summaries are more reliable and helpful than teacher summaries.

Grade Norms: The average test score obtained by students classified at a given grade placement (see Age Norms and Norms).

Grade Level Proficiency (GLP) standard: The percent of students who score at Levels 3, 4 or 5.
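The GLP standard, and the College and Career Ready (CCR) standard defined earlier in this glossary, are both simple percentages over achievement levels. A minimal sketch, using the level labels from the Achievement Levels entry and a hypothetical function name `percent_at_levels` with made-up student data:

```python
GLP_LEVELS = {"Level 3", "Level 4", "Level 5"}
CCR_LEVELS = {"Level 4", "Level 5"}

def percent_at_levels(student_levels, qualifying_levels):
    """Percent of students whose achievement level is in qualifying_levels."""
    if not student_levels:
        raise ValueError("at least one student is required")
    hits = sum(1 for level in student_levels if level in qualifying_levels)
    return 100 * hits / len(student_levels)

# Eight hypothetical students, two at each achievement level
levels = ["Not Proficient", "Not Proficient", "Level 3", "Level 3",
          "Level 4", "Level 4", "Level 5", "Level 5"]

glp = percent_at_levels(levels, GLP_LEVELS)
ccr = percent_at_levels(levels, CCR_LEVELS)
```

Because every student counted toward CCR is also counted toward GLP, the CCR percentage can never exceed the GLP percentage for the same group.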

Grading: The process of evaluating students, ranking them, and distributing each student's value across a scale. Typically, grading is done at the course level.

Growth: See READY Accountability Measures.

Guessing Parameter: The probability that a student with very low ability on the trait being measured will answer a test item correctly. There is always some chance of guessing the answer to a multiple-choice item, and this probability can vary among items. The guessing parameter enables a model to measure and account for these factors.

H

High School READY Accountability Model Components:

- End-of-Course Tests – Student performance on three end-of-course assessments: English II, Biology and Math I is counted for growth and performance. NCEXTEND1 is an alternate assessment for certain students with disabilities and is included in performance only, not in growth.

- ACT – The percentage of students meeting the UNC system admissions minimum requirement of a composite score of 17.

- Graduation Rates – The percentage of students who graduate in four years or less and five years or less.

- Math Course Rigor – The percentage of graduates taking and passing high-level math courses such as Math III.

- ACT WorkKeys – For Career and Technical Education concentrators (students who have earned four CTE credits in a career cluster), the percentage of concentrator graduates who were awarded at least a Silver Level Career Readiness Certificate based on ACT WorkKeys assessments.

- Graduation Project – The accountability report will note whether a school requires students to complete a graduation project.

High School Tests: Drill down to High School Tests at: https://www.wcpss.net/domain/2340

High-stakes test: A test used to provide results that have important, direct consequences for examinees, programs, or institutions involved in the testing.

Holistic Scoring: A scoring procedure yielding a single score based on overall student performance rather than on an accumulation of points. Holistic scoring uses rubrics to evaluate student performance (see Analytic Scoring).

Hypothesis: A specific statement of prediction.

I

i-Ready - Mathematics: Drill down to Test Information at: https://www.wcpss.net/domain/2340

i-Ready - Reading: Drill down to Test Information at: https://www.wcpss.net/domain/2340

IPT: This is the state-identified English language proficiency test. Federal and state policies require that all students identified as limited English proficient (LEP) be annually administered this test in grades K-12.

Indicators: Measures used to track performance over time. These can often be classified as input indicators (provide information about the capacity of the system and its programs); process indicators (track participation in programs to see whether different educational approaches produce different results); output indicators (short-term measures of results); or outcome indicators (long-term measures of outcomes and impacts).

Insufficient Data: This term is found on accountability results from state reports, particularly if there were not enough students to identify that student group as a subgroup for accountability purposes.

International Baccalaureate (IB) tests: Drill down to Test Information at: https://www.wcpss.net/domain/2340

Inter-rater reliability: The consistency with which two or more judges rate the work or performance of test takers.

Item: An individual question or exercise in a test or evaluative instrument.

Item Analysis: The process of examining students’ responses to test items. The difficulty and discrimination indices are frequently used in this process (see Difficulty Index and Discrimination Index).

Item Difficulty: (see Difficulty Index).

Item Discrimination: (see Discrimination Index).

Item Response Theory (IRT): A method for scaling individual items for difficulty in such a way that an item has a known probability of being correctly completed by a respondent of a given ability level. The North Carolina Department of Public Instruction (NCDPI) uses the 3-parameter model, which provides slope, threshold, and asymptote.
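A minimal sketch of the standard 3-parameter logistic form, in which the slope is `a`, the threshold is `b`, and the lower asymptote is `c`. The function name `prob_correct_3pl` and the parameter values are hypothetical. Some formulations also multiply the slope by a scaling constant of 1.7; whether NCDPI does so is not stated here, so the constant is exposed as the assumed parameter `d` with a default of 1.0.

```python
import math

def prob_correct_3pl(theta, a, b, c, d=1.0):
    """Probability that an examinee of ability theta answers the item correctly.

    a: slope (discrimination), b: threshold (difficulty),
    c: lower asymptote (guessing), d: optional scaling constant (assumed 1.0).
    """
    return c + (1 - c) / (1 + math.exp(-d * a * (theta - b)))

# Hypothetical item: slope 1.0, threshold 0.0, asymptote 0.2
p_at_threshold = prob_correct_3pl(0.0, a=1.0, b=0.0, c=0.2)
p_very_low = prob_correct_3pl(-50.0, a=1.0, b=0.0, c=0.2)
p_very_high = prob_correct_3pl(50.0, a=1.0, b=0.0, c=0.2)
```

At the threshold the probability is halfway between the asymptote and 1. For very low ability it approaches the asymptote, which corresponds to the Guessing Parameter entry in this glossary.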

Item Tryout: A collection of a limited number of items of a new type, a new format, or a new curriculum. Only a few forms are assembled to determine the performance of new items and not all objectives are tested.

K

K-8 READY Accountability Model Components:

- Statewide accountability testing is done in grades 3-8 only. For students in grades K-2, special age-appropriate assessments are used to chart students’ academic progress and are not included in the READY accountability model.

- End-of-grade assessments in reading and mathematics in grades 3-8 and science assessments in grades 5 and 8 are counted for academic growth and performance. NCEXTEND1 is an alternate assessment for certain students with disabilities and is included in performance only, not in growth.

Kindergarten Entry Assessment (KEA): Drill down to Test Information at: https://www.wcpss.net/domain/2340

L

Learning Outcomes: Learning outcomes describe the learning mastered in behavioral terms at specific levels. In other words, what the learner will be able to do.

Learning Standards: Learning standards define in a general sense the skills and abilities to be mastered by students in each strand at clearly articulated levels of proficiency.

Level of Significance: The Type I error rate or the probability that a null hypothesis will be rejected when it is actually true, (see Significance Level).

Likert Scale: A method of scaling in which items are assigned interval-level scale values and the responses are gathered using an interval level response format.

Limited English Proficiency (LEP): The identification given to students who score below Superior in at least one domain on the state-mandated English language proficiency test.

Location Parameter: A statistic from item response theory that pinpoints the ability level at which an item discriminates, or measures best.

Longitudinal: A study that takes place over time.

Low-Performing Schools and Districts: NCDPI staff use data to identify low-performing schools and districts, which, under state law, are based on the School Performance Grade and Education Value-Added Assessment System (EVAAS) growth calculations. Low-performing schools are those that receive a school performance grade of D or F and a school growth score of “met expected growth” or “not met expected growth” as defined by General Statute 115C-105.37. To avoid a low-performing designation, schools must earn a school performance grade of C or better.
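The low-performing rule for schools described above can be sketched as a simple check. The function name and the growth labels ("exceeded", "met", "not met") are assumptions made for illustration; they are not an official NCDPI data format.

```python
def is_low_performing(performance_grade, growth_status):
    """Return True when a school meets the low-performing definition above.

    performance_grade -- letter grade "A" through "F"
    growth_status     -- "exceeded", "met", or "not met" (hypothetical labels)
    """
    return performance_grade in {"D", "F"} and growth_status in {"met", "not met"}


# Illustrative calls with made-up schools.
school_a = is_low_performing("D", "met")
school_b = is_low_performing("D", "exceeded")
school_c = is_low_performing("C", "not met")
```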

M

Mainframe: Data warehouse for demographic student information and reports which include academically gifted (AG), limited English proficient (LEP), free or reduced-price lunch (FRL), and students with disabilities (SWD) groups.

Mantel-Haenszel: A statistical procedure that examines the differential item functioning (DIF) or the relationship between a score on an item and the different groups answering the item (e.g., gender, race). This procedure is used to identify individual items for further bias review.

mCLASS: Drill down to Test Information at: https://www.wcpss.net/domain/2340

Measurement: Process of quantifying any human attribute pertinent to education without necessarily making judgments or interpretations as to its meaning.

Mean: The arithmetic average of a set of scores. It is found by adding all the scores in the distribution and dividing by the total number of scores.

Median: The middle score in a distribution or set of ranked scores; the point (score) that divides a group into two equal parts; the 50th percentile. Half the scores are below the median, and half are above it.

Meta-analysis: A procedure that allows for the examination of trends and patterns that may exist across many different studies.

Middle School Tests: Drill down to Middle School Tests at: https://www.wcpss.net/domain/2340

Mode: The score or value that occurs most frequently in a distribution or set of scores.
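As a small illustration of the Mean, Median, and Mode entries in this section, the sketch below computes all three for a made-up set of scores using Python's standard `statistics` module. The scores are invented for the example.

```python
import statistics

# Hypothetical scores for five students.
scores = [70, 85, 85, 90, 100]

mean_score = statistics.mean(scores)      # sum of scores divided by the number of scores
median_score = statistics.median(scores)  # middle score of the ranked list
mode_score = statistics.mode(scores)      # most frequently occurring score
```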

Multiple Measures: Assessments that measure student performance in a variety of ways. Multiple measures may include standardized tests, teacher observations, classroom performance assessments, and portfolios.

Multiple-Choice Item: A question, problem, or statement (called a "stem") which appears on a test, followed by two or more answer choices, called alternatives or response choices. The incorrect choices, called distracters, usually reflect common errors. The examinee's task is to choose, from among the alternatives provided, the best answer to the question posed in the stem. These are also called "selected-response items" (see Selected-Response Item).

Multiple Regression: A statistical technique where several variables are used to predict another.

Educational Multirisk: A student within at least 2 of these groups: free or reduced-price lunch (FRL), limited English proficient (LEP), and students with disabilities (SWD).

Multi-tiered System of Support (MTSS): MTSS is a framework which promotes school improvement through engaging, research-based academic and behavioral practices. NC MTSS employs a systems-approach using data-driven problem-solving to maximize growth for all.

N

N: The symbol commonly used to represent the total number of cases in a group.

n: The symbol that represents the sample size, or the number of cases in a statistical sample.

NAEP (National Assessment of Educational Progress): The NAEP, also known as the “Nation’s Report Card”, assesses the educational achievement of elementary and secondary students in various subject areas. It provides data for comparing the performance of students in North Carolina to that of their peers in the nation.

NCEXTEND1: The North Carolina EXTEND1 is an alternate assessment designed to measure the performance of students with significant cognitive disabilities using alternate achievement standards.

NCEXTEND2: The North Carolina EXTEND2 is an alternate assessment designed to measure grade-level competencies of students with disabilities using modified achievement standards in a simplified multiple choice format.

NC School Report Card: A snapshot of status information about individual schools produced annually by North Carolina’s Department of Public Instruction (DPI).

Normal Curve Equivalents (NCEs): Normalized standard scores with a mean of 50 and a standard deviation of 21.06, (see Standard Score). The standard deviation of 21.06 was chosen so that NCEs of 1 and 99 are equivalent to percentiles of 1 and 99. There are approximately 11 NCEs to each stanine, (see Stanine).
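A minimal sketch of the relationship described above: a percentile is converted to a z-score on the standard normal curve and then rescaled to a mean of 50 and a standard deviation of 21.06. The function name is hypothetical, and the sketch assumes the percentile comes from a normally distributed norm group.

```python
from statistics import NormalDist


def nce_from_percentile(percentile):
    """Convert a percentile (strictly between 0 and 100) to a Normal Curve Equivalent."""
    z = NormalDist().inv_cdf(percentile / 100.0)  # z-score for this percentile
    return 50.0 + 21.06 * z


# Percentiles of 1, 50, and 99 should map to NCEs of roughly 1, 50, and 99.
low, middle, high = (nce_from_percentile(p) for p in (1, 50, 99))
```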

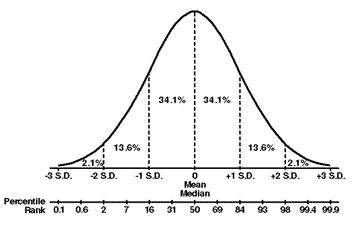

Normal Distribution: A distribution of scores or other measures that, in graphic form, have a distinctive bell-shaped appearance. In a normal distribution, the measures are distributed symmetrically around the mean. Cases are concentrated near the mean and decrease in frequency, according to a precise mathematical equation, the farther one departs from the mean. The assumption that many mental and psychological characteristics are distributed normally underlies the utility of this mathematical distribution.

The figure below is a normal distribution. It shows the percentage of cases between different scores as expressed in standard deviation units. For example, about 34% of the scores fall between the mean and one standard deviation above (or below) the mean.

Norming: The process of constructing norms. Typically norming studies are conducted to construct conversion tables so that an individual’s raw score can be compared to other individuals in a relevant reference group, the norm group.

Norm-referenced assessment: An assessment where student performance or performances are compared to that or those of a larger group. Usually the larger group or "norm group" is a sample representing a wide and diverse cross-section of students. Students, schools, districts, and even states are compared or rank-ordered in relation to the norm group. The purpose of a norm-referenced assessment is usually to sort students and not to measure achievement towards some criterion of performance.

Norms: The distribution of test scores of some specified or reference group called the norm group. For example, this may be a national sample of all fourth graders, a national sample of all fourth-grade male students, or perhaps all fourth graders in some local district. Usually norms are determined by testing a representative group and then calculating the group’s test performance.

Norms vs. Standards: Norms are not standards. Norms are indicators of what students of similar characteristics did when confronted with the same test items as those taken by students in the norms group. Standards, on the other hand, are arbitrary judgments of what students should be able to do, given a set of test items.

Null Hypothesis: A statement of equality between a set of variables.

Number Knowledge Test (NKT): Drill down to Test Information at: https://www.wcpss.net/domain/2340

O

Objective: A desired educational outcome such as "constructing meaning" or "adding whole numbers." Usually several different objectives are measured in one subtest.

OECD Test for Schools: Drill down to Test Information at: https://www.wcpss.net/domain/2340

Open-Ended Questions: Questions that provide a broad opportunity for the participant to respond.

Operational Test: A test that is administered statewide with uniform procedures, full reporting of scores, and stakes for examinees and schools.

Other Academic Indicator (OAI): This indicator was used to help determine Adequate Yearly Progress (AYP) Status for public schools and included attendance (grades K-8) and graduation rates for grades 9-12. Starting in the 2012-13 school year, the new READY Accountability Model was implemented.

Outliers: Scores in a distribution that are noticeably more extreme than the majority of scores. Exactly which score might be an outlier is usually an arbitrary decision made by the researcher.

P

Parallel Forms: Test forms that cover the same curricular material as other forms (see Equivalent Forms).

Percent Proficient: The percentage of students who scored at or above grade level for each test.

Percent Tested: Percent of eligible students tested for each test.

Percentile: A point on a distribution below which a certain percentage of the scores fall. For example, if 70% of the scores fall below a score of 56, then the score of 56 is at the 70th percentile.

Percentile Rank: The percentage of scores falling below a certain point on a score distribution. (Percentile and percentile rank are sometimes used interchangeably.)
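The percentile example above (70% of scores falling below a score of 56) can be reproduced with a short sketch. The function name and the ten scores are made up for illustration.

```python
def percentile_rank(scores, point):
    """Percentage of scores in the distribution that fall below the given point."""
    below = sum(1 for s in scores if s < point)
    return 100.0 * below / len(scores)


# Hypothetical distribution of ten scores; seven of them are below 56.
scores = [40, 42, 45, 48, 50, 52, 55, 56, 60, 65]
rank_of_56 = percentile_rank(scores, 56)
```

Here a score of 56 sits at the 70th percentile of this small distribution.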

Performance: (see READY Accountability Measures).

Performance Assessment (alternative assessment, authentic assessment, and participatory assessment): Performance assessment is a form of testing that requires students to perform a task rather than select an answer from a ready-made list. Performance assessment is an activity that requires students to construct a response, create a product, or perform a demonstration. Usually there are multiple ways that an examinee can approach a performance assessment and more than one correct answer.

Performance Composite: (see READY Performance Composite).

Performance Standards: Performance standards are defined in the following two ways:

- A statement or description of a set of operational tasks exemplifying a level of performance associated with a more general content standard; the statement may be used to guide judgments about the location of a cut score on a score scale; the term often implies a desired level of performance.

- Explicit definitions of what students must do to demonstrate proficiency at a specific level on the content standards.

Performance Task: A carefully planned activity that requires learners to address all the components of a performance standard in a way that is meaningful and authentic. Performance tasks can be used for both instructional and assessment purposes.

Pilot Test: A test that is administered as if it were “the real thing” but has limited associated reporting or stakes for examinees or schools.

Portfolio Assessment: A portfolio is a collection of work, usually drawn from students' classroom work. A portfolio becomes a portfolio assessment when (1) the assessment purpose is defined; (2) criteria or methods are made clear for determining what is put into the portfolio, by whom, and when; and (3) criteria for assessing either the collection or individual pieces of work are identified and used to make judgments about performance. Portfolios can be designed to assess student progress, effort, or achievement, and encourage students to reflect on their learning.

Pre-ACT: Drill down to Test Information at: https://www.wcpss.net/domain/2340

Predictive Validity: The ability of a score on one test to forecast a student's probable performance on another test of similar skills. Predictive validity is determined by mathematically relating scores on the two different tests.

Profile: A graphic presentation of several scores expressed in comparable units of measurement for an individual or a group. This method of presentation permits easy identification of relative strengths or weaknesses across different tests or subtests.

PSAT: Drill down to Test Information at: https://www.wcpss.net/domain/2340

p-Value: The proportion of people in a group who answer a test item correctly; usually referred to as the difficulty index, (see Difficulty Index).

Q

Qualitative Measurement: Collecting information that is not numeric in nature. Qualitative data typically consist of text while quantitative data consist of numbers. Some sources of qualitative data may include written documents (e.g., student assignments), interviews (e.g., focus groups), case studies (e.g., portfolios) and open-ended survey questions or questionnaires.

Quality Tools: Strategies that assist groups to effectively analyze during the continuous improvement cycle.

Quantitative Measurement: Collecting information that is numeric in nature. Quantitative data are those in which the values of a variable differ in amount (in numeric terms) rather than in kind (in descriptive terms). These data can be analyzed using quantitative methods and possibly generalized to a larger population.

Quartile: One of three points that divides the scores in a distribution into four groups of equal size. The first quartile or 25th percentile, separates the lowest fourth of the group; the middle quartile, the 50th percentile or median, divides the second fourth of the cases from the third; and the third quartile, the 75th percentile, separates the top quarter.
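A brief sketch of the three quartile points, using `statistics.quantiles` from Python's standard library (available in Python 3.8 and later). The scores are invented, and the exact cut points depend on the interpolation method; the library default is used here as an assumption.

```python
import statistics

# Hypothetical scores for eight students.
scores = [55, 60, 62, 70, 71, 75, 80, 90]

# n=4 returns the three points that split the scores into four groups.
first_quartile, middle_quartile, third_quartile = statistics.quantiles(scores, n=4)
```

The middle quartile is the same value as the median of the scores.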

Quasi-equated: Item statistics are available for items that have been through item tryouts (although they could change after revisions); and field test forms are developed using this information to maintain similar difficulty levels to the extent possible.

R

Rater: A person who evaluates or judges student performance on an assessment against specific criteria.

Rater Training: The process of educating raters to evaluate student work and produce dependable scores. Typically, this process uses anchors to acquaint raters with criteria and scoring rubrics. Open discussions between raters and the trainer help to clarify scoring criteria and performance standards, and provide opportunities for raters to practice applying the rubric to student work. Rater training often includes an assessment of rater reliability that raters must pass in order to score actual student work.

Rating Scales: Subjective assessments made on predetermined criteria in the form of a scale. Rating scales include numerical scales or descriptive scales.

Raw Data: Data that are unorganized and unaggregated.

Raw Score: A person’s observed score on a test (e.g., the number of items answered correctly). While raw scores do have some usefulness, they should not be used to make comparisons between performances on different tests unless other information about the characteristics of the tests is known. For example, if a student answered 24 items correctly on a reading test and 40 items correctly on a mathematics test, we should not assume that he or she did better on the mathematics test than on the reading test. Perhaps the reading test consisted of 35 items and the mathematics test consisted of 80 items. Given this additional information, we might conclude that the student did better on the reading test (24/35 as compared with 40/80). How well did the student do in relation to other students who took the reading test? We cannot address this question until we know how well the class as a whole did on the reading test. Twenty-four items answered correctly may seem impressive, but if the average (mean) score attained by the class was 33, the student’s score of 24 takes on a different meaning.
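The comparison in the raw score example can be checked with a few lines of arithmetic. The helper name is hypothetical; the numbers are the ones used in the entry above.

```python
def proportion_correct(raw_score, number_of_items):
    """Share of items answered correctly, between 0 and 1."""
    return raw_score / number_of_items


reading = proportion_correct(24, 35)      # about 0.69
mathematics = proportion_correct(40, 80)  # exactly 0.5

# The higher raw score (40) corresponds to the lower proportion correct.
did_better_in_reading = reading > mathematics
```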

Read to Achieve: The goal of the state’s Read to Achieve program is to ensure that every third grader is reading at or above grade level. Students who are not reading at grade level by the end of third grade receive extra support, including reading camps, multiple opportunities to show proficiency, guaranteed uninterrupted blocks of reading time, and intensive reading interventions so that they will be more prepared to do fourth-grade work.

READY Accountability Background: The READY accountability model includes three components:

- A Standard Course of Study focused on the most critical knowledge and skills that students need to be successful at the next grade level and after high school.

- End-of-grade and end-of-course assessments with rigorous open-ended questions and real-world applications that require students to express their ideas clearly with supporting facts.

- An accountability model that measures how well schools are doing to ensure that students are career and college ready upon high school graduation.

READY Accountability Measures:

- Performance – The percentage of students in the school who score at Achievement Levels 1-5. Achievement Level 3 is considered grade-level proficiency and Achievement Levels 4 and 5 are considered on track to be college and career ready.

- Growth – An indication of the rate at which students in the school learned over the past year. The standard is roughly equivalent to a year’s worth of growth for a year of instruction. Growth is reported for each school as Exceeded Growth Expectations, Met Growth Expectations, or Did Not Meet Growth Expectations. For more information see https://ncdpi.sas.com/

READY Performance Composite: The performance composite is the number of proficient scores on all EOGs and EOCs administered in a school divided by the number of all scores from all EOG and EOC assessments administered.
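A minimal sketch of the performance composite calculation described above. The function name and the counts are hypothetical, and expressing the result as a percentage is an assumption about how the figure is reported.

```python
def performance_composite(proficient_scores, total_scores):
    """Proficient EOG/EOC scores divided by all EOG/EOC scores, as a percentage."""
    if total_scores <= 0:
        raise ValueError("total_scores must be positive")
    return 100.0 * proficient_scores / total_scores


# Made-up school: 450 proficient scores out of 600 scores across all tests.
composite = performance_composite(450, 600)
```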

READY School Accountability Growth: North Carolina uses EVAAS to report school accountability growth values. EVAAS reports whether a school’s students (not individual students) (1) did not meet growth, (2) met expected growth, or (3) exceeded expected growth. Schools meet expected growth when their students make progress that is similar to the progress at an average school in the State. Schools designated as having exceeded expectations have demonstrated that students grew more than students at the average school in the State.

Regression to the Mean: Tendency of a posttest score (or a predicted score) to be closer to the mean of its distribution than the pretest score is to the mean of its distribution. Because of the effects of regression to the mean, students obtaining extremely high or extremely low scores on a pretest tend to obtain less extreme scores on a second assessment of the same test (or on some similar measure).

Relative Achievement Level: Relative achievement levels are a metric that indicates where within an achievement level a student's scale score falls by attaching decimal places to the usual achievement level designation. For example, a relative achievement level score of "3.48" would indicate that a student's scale score is in the middle of the Level III range for that test.

Reliability: The degree to which the results of an assessment are dependable and consistently measure particular student knowledge or skills. Reliability is an indication of the consistency of scores across raters, over time, or across different tasks or items that measure the same thing. Thus, reliability may be expressed as (a) the relationship between test items intended to measure the same skill or knowledge (item reliability), (b) the relationship between two administrations of the same test to the same student or students (test/retest reliability), or (c) the degree of agreement between two or more raters (rater reliability). An unreliable assessment cannot be valid.

Reliability Coefficients: Statistical estimates of the reliability of a test or measurement.

Reliability of Difference Scores: (see Difference Score Reliability).

Rubrics: Specific sets of criteria that clearly define for both student and teacher what a range of acceptable and unacceptable performance looks like. Criteria define descriptors of ability at each level of performance and assign values to each level.

S

Sample: A subset of a population.

SAT: Drill down to Test Information at: https://www.wcpss.net/domain/2340

Scales of Measurement: Different ways of categorizing measurement outcomes. There are four types:

- Nominal – the characteristics of an outcome that fit into one and only one class or category, where the categories are mutually exclusive (e.g., gender, ethnicity, political affiliation, etc.).

- Ordinal – the ordering of the things that are being measured (e.g., students can be ordered according to their class rank).

- Interval – A scale of measurement that is characterized by equal distances between points on some underlying continuum. An interval scale is marked off in units of equal size such that the distance between units is the same at each point along the scale.

- Ratio – A scale of measurement that is characterized by an absolute zero.

Scale score: A Scale Score is a score derived from an assessment which places the result of that assessment on a predetermined “number line” which allows it to be compared to other results from that same assessment (either comparing one test taker who takes the assessment multiple times, or comparing one taker’s result to that of another).

Scatterplot: A plot of paired data points which indicates the relationship between two variables.

School Assistance Module (SAM): A centralized database of student information that assists schools in management tasks. It is populated by PowerSchool data and has multiple components within the module.

School Performance Grades: Schools receive a letter grade under the General Assembly’s A-F School Performance Grades. The grades are based on the school’s achievement score and on students’ academic growth. The final grade is based on a 15-point scale.

Schools also have the opportunity to earn an A+NG for their School Performance Grade. Schools receiving this grade earned an A and did not have a significant achievement gap that was larger than the largest state average achievement gap. This additional designation was added in 2014-15 to address federal requirements that the highest designation not be awarded to schools with significant achievement gaps.

Scattergram: (see Scatterplot).

Scholastic Aptitude: The combination of native and acquired abilities that are needed for school learning; the likelihood of success in mastering academic work as estimated from measures of the necessary abilities.

Screening: A fast and efficient measurement to identify individuals from a large population who may deviate in a specified area, such as the incidence of maladjustment or readiness for academic work.

Selected-Response Item: A question or incomplete statement that is followed by answer choices, one of which is the correct or best answer. It is also referred to as a "multiple-choice" item, (see Multiple-Choice Item).

Significance Level: The likelihood that a statistical test will identify a relationship between variables when, in reality, that relationship does not exist, (see Level of Significance).

Slope: The ability of a test item to distinguish between examinees of high and low ability.

SMART Goals: Improvement goals that are strategic, measurable, attainable, results-oriented, and time-bound.

Standard Deviation (SD): A measure of the variability or dispersion of a distribution of scores. The more the scores cluster around the mean, the smaller the standard deviation. In a normal distribution of scores, about 68.3% of the scores are within the range of one S.D. below the mean to one S.D. above the mean. Computation of the S.D. is based upon the square of the deviation of each score from the mean, (see Normal Distribution).
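As a sketch of the computation described above, the code below squares each score's deviation from the mean, averages the squares, and takes the square root. The scores are made up, and dividing by the number of scores (the population form) is an assumption; a sample standard deviation would divide by one less.

```python
import math
import statistics

# Hypothetical scores for eight students.
scores = [2, 4, 4, 4, 5, 5, 7, 9]

mean_score = sum(scores) / len(scores)
squared_deviations = [(s - mean_score) ** 2 for s in scores]
sd_by_hand = math.sqrt(sum(squared_deviations) / len(scores))

# The standard library's population standard deviation gives the same result.
sd_library = statistics.pstdev(scores)
```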

Standard Error of Measurement (SEM): The amount an observed score is expected to fluctuate around the true score. For example, the obtained score will not differ by more than plus or minus one standard error from the true score about 68% of the time. About 95% of the time, the obtained score will differ by less than plus or minus two standard errors from the true score. It is a measure of the uncertainty inherent in measuring something with a single assessment. The SEM is frequently used to obtain an idea of the consistency of a person’s score or to set a band around a score. Suppose a person scores 110 on a test where the SEM is 6. We would thus say we are 68% confident the person’s true score was between (110–1 SEM) and (110+1 SEM), or between 104 and 116.
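The band in the example above can be computed directly. The function name is hypothetical; the score of 110 and SEM of 6 come from the entry.

```python
def score_band(observed_score, sem, number_of_sems=1):
    """Lower and upper limits of a band around an observed score."""
    margin = number_of_sems * sem
    return (observed_score - margin, observed_score + margin)


band_68 = score_band(110, 6)                    # about 68% confidence
band_95 = score_band(110, 6, number_of_sems=2)  # about 95% confidence
```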

Standard Score: A general term referring to scores that have been "transformed" for reasons of convenience, comparability, ease of interpretation, etc. The basic type of standard score, known as a z-Score, is an expression of the deviation of a score from the mean score of the group in relation to the standard deviation of the scores of the group. Most other standard scores are linear transformations of z-Scores, with different means and standard deviations, (see z-Score).
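A short sketch of a z-score and of a linear transformation to another standard score scale. The function names and the example test (mean 50, standard deviation 15) are hypothetical; the target scale uses the mean of 50 and standard deviation of 10 given in the T-Score entry of this glossary.

```python
def z_score(score, group_mean, group_sd):
    """Deviation of a score from the group mean, in standard deviation units."""
    return (score - group_mean) / group_sd


def rescale(z, new_mean, new_sd):
    """Linear transformation of a z-score onto a scale with the given mean and SD."""
    return new_mean + new_sd * z


# Made-up example: a score of 65 on a test with mean 50 and SD 15.
z = z_score(65, 50, 15)
t = rescale(z, new_mean=50, new_sd=10)
```

A score one standard deviation above the mean has a z-score of 1 and a T-score of 60.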

Standardization: A consistent set of procedures for designing, administering, and scoring an assessment. The purpose of standardization is to ensure that all students are assessed under the same conditions so that their scores have the same meaning and are not influenced by differing conditions. Standardized procedures are very important when scores will be used to compare individuals or groups.

Standardization (or Norming) Sample: That part of the population that is used in the norming of a test, i.e., the reference population. The sample should represent the population in essential characteristics, some of which may be geographical location, age, or grade for K-12 students.

Standardized Testing: A test designed to be given under specified, standard conditions to obtain a sample of learner behavior that can be used to make inferences about the learner's ability. Standardized testing allows results to be compared statistically to a standard such as a norm or criteria. If the test is not administered according to the standard conditions, the results are generally considered invalid.

Standards: The broadest of a family of terms referring to statements of expectations for student learning, including content standards, performance standards, and benchmarks, (see also Norms vs. Standards).

Stanine: A unit of a standard score scale that divides the norm population into nine groups with the mean at stanine 5. The word stanine draws its name from the fact that it is a Standard score on a scale of NINE units.

Comparison Table of Stanines and Percentiles

| Stanines | Approximate Percentiles | Percentage of Examinees |
|---|---|---|
| 9 - Highest Level | 96-99 | 4% |
| 8 - High Level | 90-95 | 7% |
| 7 - Well above average | 78-89 | 12% |
| 6 - Slightly above average | 60-77 | 17% |
| 5 - Average | 41-59 | 20% |
| 4 - Slightly below average | 23-40 | 17% |
| 3 - Well below average | 11-22 | 12% |
| 2 - Low Level | 5-10 | 7% |
| 1 - Lowest Level | 1-4 | 4% |
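The approximate percentile ranges in the stanine comparison table can be turned into a simple lookup. This is a minimal sketch; the function name is hypothetical, and the boundaries are the approximate ones listed in the table rather than exact cut points.

```python
from bisect import bisect_left

# Highest percentile in each stanine, from stanine 1 through stanine 9.
UPPER_PERCENTILES = [4, 10, 22, 40, 59, 77, 89, 95, 99]


def stanine_from_percentile(percentile):
    """Return the stanine (1-9) for a whole-number percentile from 1 to 99."""
    if not 1 <= percentile <= 99:
        raise ValueError("percentile must be between 1 and 99")
    return bisect_left(UPPER_PERCENTILES, percentile) + 1


average_stanine = stanine_from_percentile(50)
top_stanine = stanine_from_percentile(96)
```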

Statistical Significance: (see Significance Level).

Statistics: Analytic tools concerned with the collection, analysis, interpretation, explanation or presentation of data in an effort to help describe and make inferences about a sample and the population from which it was drawn, or about the relationship between variables.

Stem: The actual test question in a multiple-choice format is referred to as the stem.

Student Achievement: (see Achievement Data).

Students with Disabilities (SWD): A broadly defined group of students with physical or mental impairments such as blindness or learning disabilities that might make it more difficult for them to learn through the same methods or at the same rates as other students. Students identified as SWD should always have a current IEP. This student group is also one of the AMO subgroups.

Subgroup: A subset of a population who all share some characteristic.

Summative Assessments: Assessments that determine how much a student knows at a given point in time (e.g., end of a quarter, semester, year, etc.).

Survey Research: The process of acquiring information about one or more groups of people by asking them questions and tabulating their answers.

T

Teacher Turnover: A measure of the percentage of teachers no longer in teaching roles at that same school as compared to the previous year.

Tests: For a list (by grade level) of the required assessments administered in WCPSS, go to: https://www.wcpss.net/domain/2340

Test Battery: (see Battery).

Test Blueprint: The testing plan, which includes numbers of items from each objective to appear on a test, and the arrangement of objectives.

Test Item: (see Item).

Test Objective: (see Objective).

Test of Statistical Significance: The application of a statistical procedure to determine whether observed differences exceed the critical value, indicating that chance is not a likely explanation for the results.

The ABCs: The ABCs of Public Education was North Carolina’s comprehensive plan to improve public schools. Starting in the 2012-13 school year, the READY Accountability Model replaced the ABCs. The ABCs plan was based on three goals: 1) Strong Accountability; 2) Mastery of Basic Skills; and 3) Localized Control. The model used End-of-Grade tests in grades 3-8 and End-of-Course tests in grades 6-12. Components for dropout, enrollment in the college/university courses of study, and competency were included in grades 9-12. The model included standards for the performance and growth of student achievement:

- ABCs Expected Growth: Met when a school’s average of academic change scores for students was 0 or greater. Academic change measured how close individual students came to their predicted performance. Starting in the 2012-13 school year, the new READY Accountability Model was implemented.

- ABCs Growth Model: In this model, students were expected to do at least as well in the current year as they had in the past, compared to other NC students who took the same statewide test in the year standards were set (usually the first year the test was given). Schools met “expected growth” if students, on average, showed a year of growth in a year’s time. Schools met “high growth” if 60% of students in a school met their individual growth targets. Starting in the 2012-13 school year, the new READY Accountability Model was implemented. Growth is now calculated using the EVAAS model (see EVAAS).

- ABCs Performance Composite: The percentage of students' scores at or above grade level. The composite reflects the percentage of scores at grade level across all tested subjects. All students tested are included in analyses. Starting in 2012-13, the North Carolina Department of Public Instruction implemented the new READY Accountability Model (see READY Performance Composite).

- ABCs Standards -- Awards and Recognitions: ABCs Awards were used to recognize school excellence based on both student growth and performance. Several categories existed, the highest of which were Honor School of Excellence and School of Excellence. Both recognized schools that showed expected growth and had a performance composite of 90% or higher (scores at or above grade level). "Honor" was added for schools also meeting AYP federal standards. School recognitions were based on student performance and staff bonuses were based on growth. Starting in the 2012-13 school year, the new READY Accountability Model was implemented.

Threshold: The point on the ability scale where the probability of a correct response is fifty percent. Threshold for an item of average difficulty is 0.00.

T-Score: A standard score with a mean of 50 and a standard deviation of 10. Thus a T-Score of 60 represents a score one standard deviation above the mean.
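
A T-Score can be obtained by rescaling a z-score to a mean of 50 and a standard deviation of 10. The sketch below shows that conversion; the function name and the sample numbers (a raw score of 115 on a test with mean 100 and standard deviation 15) are hypothetical.

```python
def t_score(raw_score: float, mean: float, sd: float) -> float:
    """Convert a raw score to a T-Score (mean 50, standard deviation 10)."""
    if sd <= 0:
        raise ValueError("standard deviation must be positive")
    z = (raw_score - mean) / sd  # distance from the mean in standard deviations
    return 50.0 + 10.0 * z


# A raw score one standard deviation above the mean maps to a T-Score of 60.
example_t = t_score(raw_score=115, mean=100, sd=15)
```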

Triangulation: A process of combining evidence or data from multiple sources in order to provide support for a conclusion. The use of triangulation supports a central finding and overcomes the weakness associated with a single methodology.

True Score: The score that someone would obtain on a test if their ability were measured with 100% accuracy.

Type I Error: Rejecting the null hypothesis when it is true. The probability of a Type I error is the same as the level of statistical significance: the level of risk you are willing to take that the null hypothesis is rejected when it is true.

Type II Error: Failing to reject the null hypothesis when it is false; for example, a relationship between two variables exists but fails to show up in a single study.

V

Validity: The extent to which something measures what it purports to measure. Validity indicates the degree of accuracy of either predictions or inferences based upon a test score or other measure. The term validity has different connotations and, therefore, different kinds of validity evidence are appropriate for various measurement situations.

- Content validity: For achievement tests, content validity is the extent to which the content of the test represents a balanced and adequate sampling of the outcomes (domain) about which inferences are to be made.

- Criterion-related validity: The extent to which scores on the test are in agreement with other measurements taken in the present (concurrent) or future (predictive).

- Construct validity: The extent to which a test measures some relatively abstract psychological trait or construct; applicable in evaluating the validity of tests that have been constructed on the basis of an analysis of the trait and its manifestation.

- Face validity: An estimate of whether a test appears to measure a certain criterion; it does not guarantee that the test actually measures what it purports to measure. Face validity relates to whether a test appears to be a good measure or not. This judgment is made on the "face" of the test, so it can be made even by a non-expert.

Variability or Variance: A measure of statistical dispersion of scores.

W

Weighting: The process of assigning different emphasis to different scores in making some final decision.
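
One common form of weighting is a weighted average, in which each score contributes in proportion to its assigned weight. The sketch below is only an example; the component names, scores, and weights are hypothetical.

```python
def weighted_composite(scores: dict, weights: dict) -> float:
    """Weighted average of scores; weights need not sum to 1."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted_sum = sum(scores[name] * weight for name, weight in weights.items())
    return weighted_sum / total_weight


# Hypothetical example: the exam counts three times as much as the project.
final_score = weighted_composite(
    scores={"exam": 80, "project": 90},
    weights={"exam": 0.75, "project": 0.25},
)
```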

WINSCAN Program: Proprietary computer program that contains the test answer keys and files necessary to scan and score multiple-choice tests. Student scores and local reports can be generated immediately using the program.

Z

z-Score: A type of standard score with a mean of zero and a standard deviation of one. It is a statistical measure of how far, in standard deviations, a data point is from the average (see Standard Score).
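
The sketch below standardizes a small set of scores into z-scores. It uses the population standard deviation, which is an assumption made for this example (some applications use the sample standard deviation instead); the scores are hypothetical.

```python
import statistics


def z_scores(scores):
    """Convert each score to its distance from the mean in standard deviations."""
    mean = statistics.mean(scores)
    sd = statistics.pstdev(scores)  # population standard deviation (assumed)
    if sd == 0:
        raise ValueError("all scores are identical; z-scores are undefined")
    return [(score - mean) / sd for score in scores]


# Hypothetical scores; the resulting z-scores have mean 0 and standard deviation 1.
standardized = z_scores([70, 80, 90])
```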
